If you are implementing Veeam Backup & Replication, you will sooner or later (and it will be sooner…) have to take a closer look at the available transport modes.

Here are my “lessons learned” on this topic, outlined as the main points for each mode (I keep adapting these tables):

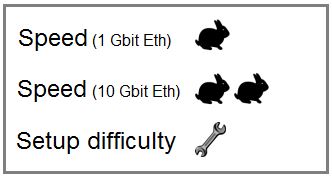

Direct Storage Access = SAN Mode

Pros:

fastest backup and fastest full VM restore -> first choice for large environments

most reliable and predictable backup performance

zero impact on vSphere hosts

zero impact on your production network as backup traffic remains in the SAN fabric

Cons:

Direct SAN restore supports thick-provisioned disks only!

risk of an accidental re-signature of your VMFS LUNs (operating error by an admin!)

more administrative effort to keep MPIO software, firmware, and drivers on proxies and hosts up to date

requires a physical server

may be difficult to configure (think about FC zoning)

cannot be used for incremental restore!
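To make the two restore restrictions above a bit more tangible, here is a minimal Python sketch of the check a restore would have to pass before Direct SAN mode is an option. `VirtualDisk` and `can_use_direct_san_restore()` are hypothetical names made up for illustration; they are not part of any Veeam API.

```python
from dataclasses import dataclass


# Hypothetical model of a VM disk, for illustration only (not a Veeam API).
@dataclass
class VirtualDisk:
    name: str
    thin: bool  # True = thin-provisioned, False = thick-provisioned


def can_use_direct_san_restore(disks: list[VirtualDisk], incremental: bool) -> bool:
    """Return True only if Direct SAN mode is possible for this restore.

    Encodes the two restrictions listed above: no incremental restore,
    and every disk being restored must be thick-provisioned.
    """
    if incremental:
        return False
    return all(not disk.thin for disk in disks)


vm_disks = [VirtualDisk("disk0", thin=False), VirtualDisk("disk1", thin=True)]
print(can_use_direct_san_restore(vm_disks, incremental=False))  # False: disk1 is thin
```

A single thin disk is enough to rule out Direct SAN for the whole restore in this sketch.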

Prerequisites:

install the Veeam proxy role on a physical server with direct FC or iSCSI access to your SAN

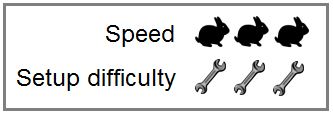

Hot Add (Virtual Appliance)

Pros:

fast in small and medium environments

no physical HW necessary

first choice for smaller environments or if SAN mode is not available

good choice for 1 Gbit Ethernet configurations, as the proxy performs source-side data deduplication and compression

Cons:

consumes resources on your vSphere cluster (it’s a VM…)

may cause “consolidation needed” issues and/or leave hidden (orphaned) snapshots

may stun “sensitive” VMs such as SQL database servers while configuration changes are performed

hot remove may cause an extended stun in NFS environments

can be slow in large clusters because of the configuration changes required on the proxy VM (consider the time each hot-add operation takes)

if VMs reside on local datastores, you need one proxy per ESXi host -> otherwise Veeam falls back to NBD

Prerequisites:

install the Veeam proxy role on a VM in the same cluster (or on the same host) as the VMs you are backing up
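The local-datastore rule from the cons above, combined with this prerequisite, boils down to a simple placement check. The following Python sketch (hypothetical function and host names, my own illustration) assumes each VM lives on the local datastore of exactly one ESXi host and that a hot-add proxy can only attach disks from datastores its own host can see:

```python
# Hypothetical helper for illustration only; not a Veeam API.
def plan_transport(vm_hosts: dict[str, str], proxy_hosts: set[str]) -> dict[str, str]:
    """Map each VM to 'hotadd' or 'nbd'.

    vm_hosts:    VM name -> ESXi host whose *local* datastore holds the VM's disks
    proxy_hosts: ESXi hosts that run a hot-add proxy VM
    A VM on a host without its own proxy cannot be hot-added and falls back to NBD.
    """
    return {vm: ("hotadd" if host in proxy_hosts else "nbd")
            for vm, host in vm_hosts.items()}


plan = plan_transport({"sql01": "esx01", "web01": "esx02"}, proxy_hosts={"esx01"})
print(plan)  # {'sql01': 'hotadd', 'web01': 'nbd'}
```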

Note:

install at least one proxy per cluster

more proxies allow more VMs/VM disks to be processed in parallel (= generally, the more proxies, the better the performance)

add an extra SCSI controller to the proxy VM so that more VM disks can be processed in parallel, and increase the proxy's max concurrent tasks setting (default = 4)
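Why both the extra SCSI controller and a higher task setting matter can be shown with a back-of-the-envelope calculation: whichever limit is lower caps the parallelism. This Python sketch is an illustration under stated assumptions, not Veeam's internal logic: one task processes one VM disk, each SCSI controller offers 15 usable disk slots (the classic vSphere limit for LSI Logic controllers; PVSCSI on newer vSphere versions may allow more), and the proxy's own system disk occupies one slot.

```python
# Hypothetical estimate for illustration only; not Veeam's actual scheduling logic.
def parallel_disks(max_concurrent_tasks: int, scsi_controllers: int,
                   slots_per_controller: int = 15, proxy_own_disks: int = 1) -> int:
    """Estimate how many VM disks one hot-add proxy can process at once.

    Assumptions: one task = one VM disk, 15 usable slots per SCSI
    controller, and the proxy's own disk(s) take up slots as well.
    """
    free_slots = scsi_controllers * slots_per_controller - proxy_own_disks
    return max(0, min(max_concurrent_tasks, free_slots))


print(parallel_disks(max_concurrent_tasks=4, scsi_controllers=1))   # 4  (task setting is the limit)
print(parallel_disks(max_concurrent_tasks=20, scsi_controllers=1))  # 14 (SCSI slots are the limit)
print(parallel_disks(max_concurrent_tasks=20, scsi_controllers=2))  # 20 (extra controller lifts the cap)
```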

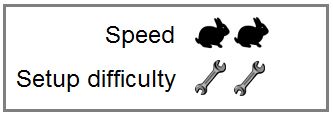

NBD (Network)

Pros:

no physical HW necessary

no additional configuration necessary (will always work)

good choice if a 10 Gbit (or faster) Ethernet connection is available

good choice for workloads with low change rates

good choice for NFS-based storage systems as it reduces VM stunning

if “Automatic selection” is configured as the transport mode and all other modes fail, Veeam always falls back to NBD as a last resort
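The fallback behavior of “Automatic selection” can be sketched in a few lines of Python. The preference order used here (direct storage access, then virtual appliance/hot add, then network/NBD) is my understanding of how Veeam picks the mode; the function itself is a hypothetical illustration, not Veeam code.

```python
# Assumed preference order for "Automatic selection" (illustration only).
PREFERENCE = ["Direct storage access", "Virtual appliance", "Network"]


# Hypothetical helper; not part of any Veeam API.
def select_transport_mode(available: set[str]) -> str:
    """Return the first mode in preference order whose prerequisites are met.

    'available' holds the modes that work for a given VM/proxy pair.
    Network (NBD) needs no special setup, so it is the final fallback.
    """
    for mode in PREFERENCE:
        if mode in available:
            return mode
    return "Network"


print(select_transport_mode({"Virtual appliance", "Network"}))  # Virtual appliance
print(select_transport_mode(set()))                             # Network
```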

Cons:

backup traffic affects the ESXi management interface, as VM data is transported through its VMkernel interface

typically limited to using up to 40% of the bandwidth available on the corresponding VMkernel interfaces

vSphere throttles backup traffic on the ESXi management interface
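To get a feeling for what the ~40% limit means in practice, here is a rough Python calculation. It assumes decimal units (1 Gbit/s = 125 MB/s), a flat 40% usable share, and ignores protocol overhead, so treat the results as ballpark figures only. The function names are hypothetical.

```python
# Hypothetical back-of-the-envelope helpers; real throughput varies with vSphere version and load.
def nbd_throughput_mb_s(link_gbit: float, usable_share: float = 0.4) -> float:
    """Usable NBD throughput in MB/s (decimal units) on a VMkernel link."""
    return link_gbit * usable_share * 1000 / 8


def backup_hours(changed_gb: float, link_gbit: float) -> float:
    """Rough time in hours to move the changed data over NBD."""
    return changed_gb * 1000 / nbd_throughput_mb_s(link_gbit) / 3600


print(nbd_throughput_mb_s(1))           # 50.0
print(nbd_throughput_mb_s(10))          # 500.0
print(round(backup_hours(500, 1), 2))   # 2.78
print(round(backup_hours(500, 10), 2))  # 0.28
```

With 500 GB of changed data, this is the difference between roughly 2.8 hours on 1 Gbit and well under half an hour on 10 Gbit, which is why NBD is a good choice mainly with 10 Gbit or faster links.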

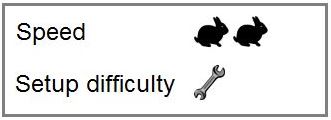

Direct NFS

introduced with Veeam Backup & Replication 9

Pros:

recommended mode for VMs whose disks are located on NFS datastores

an alternative to NBD mode

Cons:

backup proxy must have access to the NFS datastores where the VM disks reside

cannot be used for VMs that have at least one open snapshot!
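The Direct NFS conditions from this section (disks on NFS datastores, proxy access to those datastores, no snapshot on the VM) can be written down as a simple Python check. `can_use_direct_nfs()` and the datastore names are hypothetical and only serve as an illustration.

```python
# Hypothetical helper for illustration only; not a Veeam API.
def can_use_direct_nfs(disk_datastores: list[str],
                       nfs_datastores: set[str],
                       proxy_nfs_access: set[str],
                       existing_snapshots: int) -> bool:
    """Return True if one VM meets the Direct NFS conditions listed above.

    disk_datastores:    datastore name for each of the VM's disks
    nfs_datastores:     datastores that are NFS-backed
    proxy_nfs_access:   NFS datastores the backup proxy can reach
    existing_snapshots: snapshots already present on the VM
    """
    if existing_snapshots > 0:
        # Condition: the VM must not have a snapshot
        return False
    # Every disk must sit on an NFS datastore that the proxy can access
    return all(ds in nfs_datastores and ds in proxy_nfs_access
               for ds in disk_datastores)


nfs = {"nfs01", "nfs02"}
print(can_use_direct_nfs(["nfs01", "nfs01"], nfs, {"nfs01"}, 0))  # True
print(can_use_direct_nfs(["nfs01", "nfs02"], nfs, {"nfs01"}, 0))  # False: no access to nfs02
print(can_use_direct_nfs(["nfs01"], nfs, {"nfs01"}, 1))           # False: VM has a snapshot
```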

Do you think something is missing or wrong?

Just drop me an email, leave a comment, or send me a DM on Twitter (@lessi001), and I will add or correct it!